Research-based insights for Mastering the Art of Visual Storytelling (03:03)

UX Hot Take on the OpenAI Keynote Announcements (04:58)

Meta's Vision: Blurring the Lines Between Reality and the Digital Frontier (04:36)

The business value of design (05:41)
How do the world's most powerful players use design to double the value of their product ...

Great products do less, but better (03:02)
Why adding more features could mean you are heading the wrong way...

The design mistakes we continue to make (03:11)
Revisiting the famous book "Don't Make Me Think": what are the mistakes we keep on making?

|

|

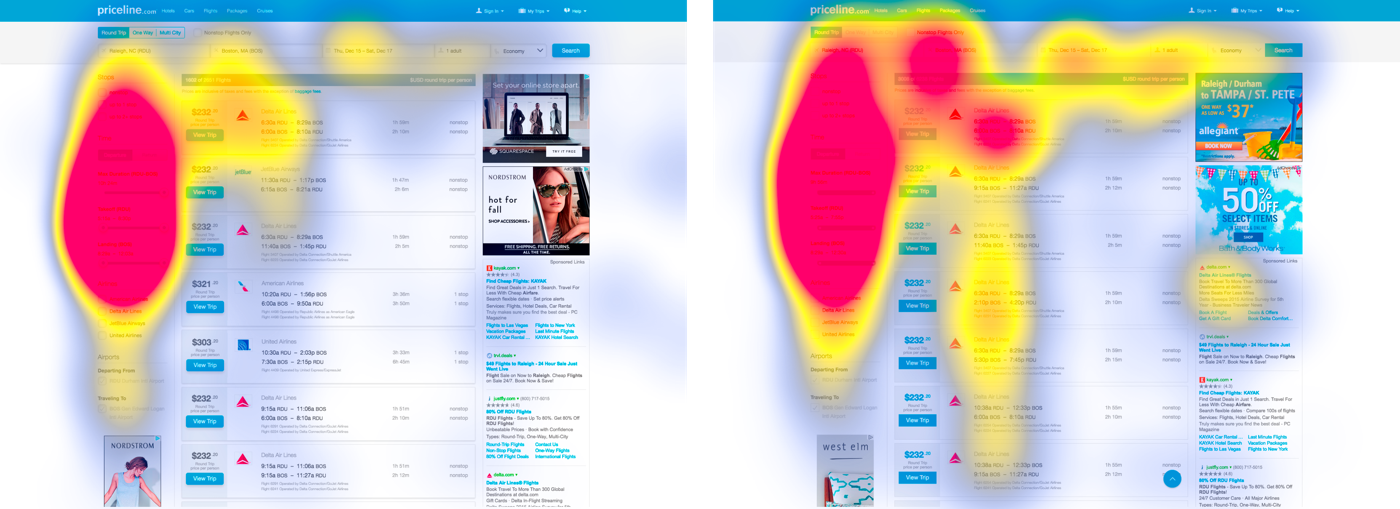

Flat UI Elements Attract Less Attention and Cause UncertaintyA study was conducted to test eye-tracking and comparing different kinds of click-ability cues. The ... |

|

02:03

The researcher's journey: leveling up as a user researcher (02:52)


Commute

Sports

Dog walking

Cleaning

Errands

Too busy? We respect your time! So you don't miss a thing, you can read, listen, or schedule articles to listen to later.